Table of Contents

ProMC User's Manual

(written by S.Chekanov, ANL)

ProMC is a package for file input and output for structural event records (such as Monte Carlo or data events). The main features include:

- Compact file format based on a content-dependent “compression” using Google's protocol buffers. Although we use the word “compression”, it it just a compact binary format (see the discussion below and here). ProMC is not based on a compression algorithm (like gzip). Therefore, no CPU overhead due to compression/decompression is expected.

- Self-describing file format. One can generate C+/Java/Python code to read or write files from existing ProMC data file and make future modifications without the language-specific code used to generate the original file.

- Multiplatform. Data records can be read and write in C++, Java and Python. PHP can be used to access event records.

- Forward and backward compatible binary format.

- Random access. Events can be read starting at any index. Individual events can be read from a remote ProMC file using random access capabilities.

- Optimized for parallel computation.

- Metadata can be encoded for each record which will allow fast access of interesting events

- Logfile can be embedded into the ProMC files. Useful for Monte Carlo generators.

- No external dependencies. The library is small and does not depend on ROOT or any other libraries.

- Well suited for archiving data. In addition of being very compact, any future modification can be made by generating analysis code using the “self-describing” feature.

ProMC(“ProtocolBuffers” MC) is based on Google's Protocol Buffers, language-neutral, platform-neutral and extensible mechanism for serializing structured data. It uses “varints” as a way to store and compress integers using one or more bytes. Smaller numbers take a smaller number of bytes. This means that low energetic particles can be represented by a smaller number of bytes since the values to store 4-momenta are smaller compared to high-energetic particles. This is important concept to store events with many soft particles (“pileup”) in the same event record since they use less disk space.

This project is tailored to HEP ANL BlueGene/Q project, since can provide a simple and efficient way to stream data from/to BlueGene/P.

Why ProMC?

The main idea behind ProMC is to use “content-dependent” compression to store particles depending on their importance. An 14 TeV pp collision event with 140 pileup soft events can have more than 10k particles. Most of them have low pT (“soft”). If we can encode 4-momenta using integer values, then soft particles can be represented by smaller values compared to most interesting (“hard”) particles. If we encode this information using Protocol buffers “variants”, we can use less bytes to store soft particles from pileup. Read Protocol-buffers Encoding.

However, Protocol buffers is still not sufficient, since it can be used write and read separate “messages” (i.e. “single events”). ProMC is designed to store multiple “messages” (or events in HEP terminology“) in a file using a platform neutral way. It also constructs a header for events and organize “messages” in a form suitable for event Monte Carlo records.

Example: A typical HEPMC file size for 100 ttbar events with 140 pileup events (14 TeV) is 1,230 MB. Gzipping this file reduces the file size to 445 MB (in which case it is impossible to read it). The main objective is to store such events in a platform-independent file of size of about 300 MB and still be able to read such data (even using random access). As you will see below, such goal has been achieved using the ProMC package.

About the data compression

When we say “compression”, we typically mean some compression algorithm. In ROOT, up to 50% of CPU is spent on compression/decompression of data. ProMC does not use any algorithm to compress or decompress files. It just streams data into a binary format without CPU overhead.

Other approaches

- HepMC is a poplar, platform independent way to store event record. The most common approach is save records in ASCII files. A typical size of data for 100 pp collision events (14 TeV) with 140 pileup events is 1.2 GB. A gzip compression can reduce it to 450 MB (mainly for storage). But there is no way to read and write compressed files. Despite large size of HEPMC files, the main advantage is that it's multiplatform and human readable.

- StdHep C++ library is no longer supported. It uses a gzip compression, bit not at the byte levels as in case of vaints. The format is not multiplatform.

- LHEF is another event record. It is XML based and inherits problems which were solved by Google. Protocol buffers have many advantages over XML for serializing structured data: 3 to 10 times smaller (assuming no zip compression) and 20 to 100 times faster.

- Another way to store is to use ROOT. The format is not fully multiplatform (although attempts were done to read using Java). ROOT uses the standard standard “gzip” compression and fixed-precision values. This means that soft and hard particles takes same storage since represented by a fixed number of bytes.

Particle's representation

Each event of the ProMC library is a “ProtocolBuffer” message. Float values (pX,pY,pZ,M) are encoded as “int64” integers. In such representation, 0.01 MeV is minimum allowed energy, while 24 TeV is maximum allowed energy.

Here is a mapping table:

| Energy | Representation | How many bytes in encoding |

|---|---|---|

| 0.01 MeV | 1 | 1 bytes |

| 0.1 MeV | 10 | 1 bytes |

| 1 MeV | 100 | 2 bytes |

| 1 GeV | 100 000 | 4 bytes |

| 1 TeV | 100 000 000 | 8 bytes |

| 20 TeV | 2000 000 000 | 8 bytes |

Thus, a 4-momentum of a soft particle (~ MeV) can be represented by a reduced number of bytes, compared to fixed length encoding. For a typical pT spectra (falling distribution), this means that the bulk of particle spectra at low pT is compressed more effectively, than for particles at the high-pT tail. This compression depends on the pT spectra.

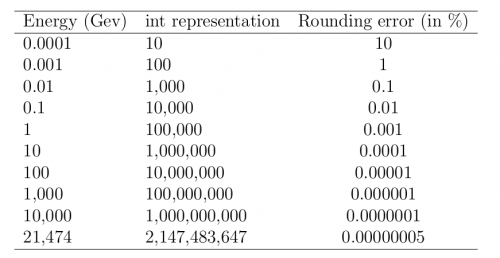

The mapping between energy values (in GeV) and C++/JAVA integer representation using the int64 varint type of the Protocol Buffers library and the multiplicative factor 100000 in the default ProMC varint conversion. The last column shows the rounding errors for the conversion.

There are other places where the Google's compression is efficient for MC event records. For example, partons typically have small integer values of:

- PDG_ID - PDG ID

- status - status code

- daughter1 - 1st daughter

- daughter2 - 2nd daughter

- mother1 - 1st mother

- mother2 - 2nd mother

thus they are compressed more effectively using “varints” than final state or exotic particles with large PDG_ID number. Also, light particles (partons) will be compressed more effectively due to their small mass.

Another place where ProMC tries to optimize storage is to set masses for most common particles as a map in the header message of the record. For example, masses of pions and kaons can be set to 0 value (1 bit). During the reading, the masses are restored using the map stored in the header file.

ProMC record layouts

A typical ProMC has 4 major ProtoBuff messages:

- File description message (“file metadata”) with timestamp, version, Nr of events, description.

- A header “message” to keep MC event metadata. It keeps global information about initial colliding particles, PDF, cross section, etc. It also keeps track of which units are used to convert floats to integers. In addition, it keeps information on PDG particles (particle ID, masses, names, charges, etc.). The header is encoded as a separate Proto buffer message an is suppose to provide the necessary description of events.

- Events as separate Protocol buffer messages streamed in multiplatform binary form using bytes with variable length. Each messages is composed on “Event information” and “Particle information”. See the description below.

- At the very end, there is a “Statistics” Proto buffer message which can be have information on all events, accumulated cross sections etc. This is optional record in ProMC.

The ProMC is based on a random access, i.e. you can read the “Header”, “Statistics”, and any event record from any place in your program.

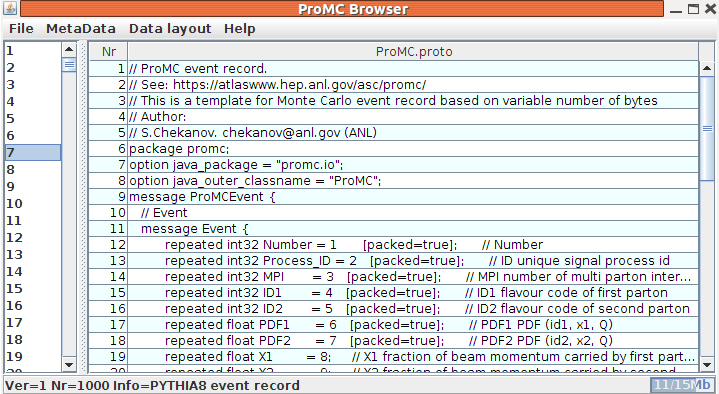

The data layouts inside ProMC files are implemented using the Google's Protocol Bufffes template files. Look at the language guide protocol-buffers used for such files. Such files can be used to generate analysis code in any programming language (C++,Java,Python.). There are a few files used to create and read ProMC files:

- The description message is ProMCDescription.proto

- The header of the file record is ProMCHeader.proto. Typically the header file created before the main loop ever events.

- The MC event record (repeatable) ProMC.proto

- The statistics of the generated events is stored in ProMCStat.proto. This record is inserted last, after a MC accumulated statistics and final cross section calculation.

These are the files that are shipped with the default installation and suitable to keep truth MC information. A more complicated data layouts are given in examples/proto directory (to keep jets, leptons, jets with constituents etc.).

The proto files (ProMCHeader.proto, ProMC.proto, ProMCHeader.proto) can be embedded to the ProMC file record, making the file “self-describing”. It is recommended to embed such files, since one can later generate analysis code in any programming language using these files. This is ideal for preserving data and make future modifications without knowing the analysis code used to create the original data. See the tutorials for examples.

To embed the layout templates inside a ProMC file, simply make a directory “proto” and copy (or link) these files. In case if such files are embedded, you can retrieve proto files and generate C++/Java/Python code which will read the data using the data structure used to create the file.

Optionally, you can also include a log file to the ProMC files. If you have a file ”logfile.txt“ in the same directory where you write ProMC files, it will be included to the ProMC record (and compressed).

Available ProMC commands

| ProMC Commands | Description |

|---|---|

| promc_info <file> | analyzes show the description |

| promc_browser <file> | Start a Java browser and look at the records |

| promc_browser <file URL> | As before, but using http/ftp |

| promc_dump <file> | dump all the information |

| promc_extract <file> <out> N | extracts N events and save them to out.promc |

| promc_proto <file> | extracts self-description of a ProMC files and generates “proto” directory describing data inside the file |

| promc_code | generates source files using “proto” directory. C++ code is generated in the directory src/, while Java code in the directory java/src |

| promc_log <file> | extracts the log file “logfile.txt” (if attached to the ProMC file) |

| promc_split <ProMC file> N | splits a ProMC files into N files in the directory “out” |

Certain program will need to be recompiled if the original event structure has changed. For example, promc_split program is not installed during the installation step and needs to be recompiled manually:

cp -r $PROMC/examples/promc_split promc_proto file.promc # extracts layout promc_code # generates header files in src/ make # compiles promc_split file.promc 7 # splits the original file into 7 files in the directory out/ with the same number of events

There are also other ways to work with the ProMC files. Here is a small Python script which reads a ProMC file and extracts self-description (including embedded proto files and logfile)

import zipfile z = zipfile.ZipFile("out/output.promc", "r") print z.read("promc_nevents") # Nr of events in the file print z.read("promc_description") # description print z.read("ProMCHeader.proto") # embedded ProtoBuffer templates used to describe messages print z.read("ProMC.proto") print z.read("ProMC.proto") print z.read("logfile.txt") # embedded logfile for filename in z.namelist(): # loop over all entries print filename #bytes = z.read(filename) #print len(bytes)

This a Python script. You can also read this info in Java and PHP, as long as you can read an entry inside a zip file.

Using ZIP to extract events

Since ProMC is a ZIP archive with binary Protocol Buffers messages, you can work with them as with any ZIP file.

Here is an example to how to list all entries inside the ProMC file:

wget http://atlaswww.hep.anl.gov/asc/promc/download/Pythia8.promc unzip -l Pythia8.promc

You can uncompress events into files as “unzip Pythia8.promc”. You can extract any event, say event “100”, as:

unzip -p Pythia8.promc 100 > 100.event unzip -p Pythia8.promc ProMC.proto > ProMC.proto

This example saves the event “100” in a file “100.events”. The second line extract ProtocolBuffer template used to pack the event into the file using the ProtocolBuffers.

Similarly, one can look at the attached logfile and print the number of stored events as:

unzip -p <ProMC file> logfile.txt unzip -p <ProMC file> promc_nevents

In these examples, we send the contents of the files “logfile.txt” and “promc\_nevents” via pipe into shell console.

Available conversions

| ProMC Commands | Description |

|---|---|

| hepmc2promc <HEPMC input> <ProMC output> “description” | converts HepMC file to ProMC file |

| promc2hepmc <ProMC input> <HepMC output> | converts ProMC file to HEPMC file |

| stdhep2promc <StdHEP input> <ProMC output> | converts StdHEP file to ProMC file |

| promc2root <ProMC input> <ProMC output> | converts ProMC file to ROOT |

| promc2stdhep <ProMC input> <STDHEP output> | converts ProMC file to STDHEP |

| promc2lcio <ProMC input> <LCIO output> | converts ProMC file to LCIO |

| lhe2promc <LHE input> <ProMC output> | converts LHEF (TXT) file with MC events to ProMC |

| txt2promc <TXT input> <ProMC output> | converts TXT file with MC events to ProMC |

| java -cp .:browser_promc.jar hepsim.MixPileup pN signal.promc minbias.promc output.promc | Pileup mixer. Mix N random events using a Poisson distribution with signal events |

Note that the conversion tools (hepmc2promc,promc2hepmc) are build during the installation (see the installation instruction). The tool that converts STDHEP to PROMC (stdhep2promc) needs to be compiled inside examples/stdhep2promc) directory. To build stdhep2promc, so this:

cd examples/stdhep2promc cd stdhep/ make cd .. make

The converts STDHEP to PROMC as:

stdhep2promc file.stdhep file.promc "description" "Nr events" "cross section in pb" "error on cross section".

ProMC to ROOT

As shown before, “promc2root” converts ProMC files to ROOT. Let us give an example:

cp -rf $PROMC/examples/promc2root . cd promc2root make prepare make # compile converter wget http://mc.hep.anl.gov/asc/hepsim/events/pp/100tev/qcd_pythia8/pythia100qcd_001.promc ./promc2root pythia100qcd_001.promc pythia100qcd_001.root

This will generate pythia100qcd_001.root file with the needed branches. The ROOT files are typically 30-50% bigger than ProMC files and the processing time of ROOT files is larger. See the benchmarks later.

Using C++ to write/read ProMC

An example which shows how to read such PROMC file can be found in “examples/random/reader.cc”. Starting from Pythi8 version 8180, ProMC is included into the Pythia8 package. See the example “main46.cc” in the directory “examples” of The Pythia8. There are two possible options to initialize ProMC:

- Here we open ProMC for writing:

ProMCBook* epbook = new ProMCBook("file.promc","w");

Event will be written to the disk after each “write()” call. The total number of events cannot be larger than 65k. After this limit, ProMC will attempt to close the file. Use this option to write large events, but limiting their number to 65k.

- Another option is to write events “in memory”:

ProMCBook* epbook = new ProMCBook("file.promc","w",true);

With this option, all events are accumulated inside the memory, and then they are written to the disk after the “close()” statement. Use this option to write “small” size events, such as those from NLO and parton-level generators. There is no limit on how many events are stored, since this option uses “zip64”.

- To read events, use this option:

ProMCBook* epbook = new ProMCBook("file.promc","r");

ProMC determines the number of events and use the appropriate library. If the number of events larger than 65k, it will use zip64 library (slightly slower for reading).

ProMC files from FORTRAN MC

Kyle Strand (summer 2013), Ed May (ANL)

ProMC files can be filled using C++ (see the examples using PYTHIA8), Java, Python and FORTRAN. FORTRAN is still popular language for some Monte Carlo models, thus an effort was put to develop a program which fills ProMC files using FORTRAN-based models.

A program called “FortranPROMC” was developed by Kyle Strand (summer 2013) which helps to fill ProMC files using FORTRAN. This program uses PYTHIA6 as examples. The program can be downloaded from the ProMC download area HEPFORGE.

Test it as. Untar the package and:

cd FortranProMC ln -s $PROMC/proto/promc proto # make sure the files are self-describing make pyt.exe > logfile.txt 2>&1 # log file will be attached to ProMC

You will see “Pythia6.promc” with events. Use the browser to look inside.

There are 2 versions of FortranPROMC:

- FortranPROMC-1.0 was designed for Linux (single server installation)

- FortranPROMC-1.1 has also a MPI interface and can be compiled on BlueGene/Q

FortranPROMC-1.1 is the most recent version developed by E.May and you are encouraged to use it. The download link are HEPFORGE or you can copy from FortranProMC-1.1.tgz.

Random access

You can extract a given record/event using a random access capabilities of this format. This is trivial to do in Java. For C++ example, check the code in “examples/random_access”. Type make to compile it and run the code. You can see that we can extract the needed event using the method “event(index)”.

Reading data remotely

You can stream data from a remote server without downloading ProMC files. The easiest is to to use the Python reader (see the example in examples/python). Below we show to to read one single event (event=100) remotely using Python:

- file.py

# Shows how to read a single event from a remote file. S.Chekanov import urllib2, cStringIO, zipfile url = "http://mc.hep.anl.gov/asc/snowmass2013/delphes36/TruthRecords/higgs14tev/pythia8/pythia8_higgs_1.promc" try: remotezip = urllib2.urlopen(url) zipinmemory = cStringIO.StringIO(remotezip.read()) zip = zipfile.ZipFile(zipinmemory) for fn in zip.namelist(): # print fn if fn=="100": data = zip.read(fn) print "Read event=100" except urllib2.HTTPError: print "no file"

In this example. “data” represents a ProMC event record. Look at the example in the example in examples/python how to print such info.

ProMC Data Browser

You can look at events and other information stored in the ProMC files using a browser implemented in Java. It runs on Linux/Windows/Mac without any external libraries. First, get the browser:

wget http://atlaswww.hep.anl.gov/asc/promc/download/browser_promc.jar

And run it as (it assumes Java7 and above. Check it as “java -version”, it should show 1.7.X version)

java -jar browser_promc.jar

Now we can open a ProMC file. Let's get an example ProMC file which keeps 1,000 events generated by Pythia8:

wget http://atlaswww.hep.anl.gov/asc/promc/download/Pythia8.promc

On Windows/Linux, one can open this file in the browser as: [File]→[Open file]. Or you can open it using the prompt:

java -jar browser_promc.jar Pythia8.promc

To read data using the network is also possible (http/ftp):

java -jar browser_promc.jar http://atlaswww.hep.anl.gov/asc/promc/download/Pythia8.promc

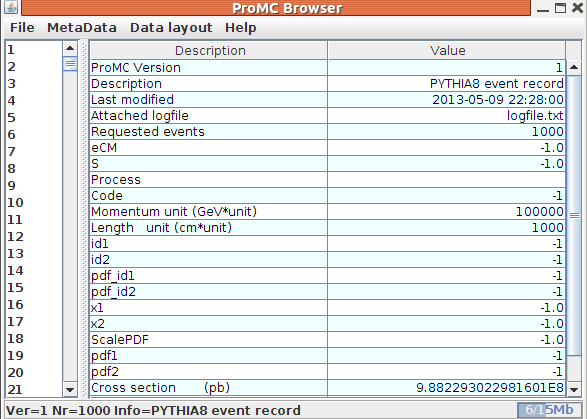

This opens the file and shows the metadata (i.e. information stored in the header and statistics records):

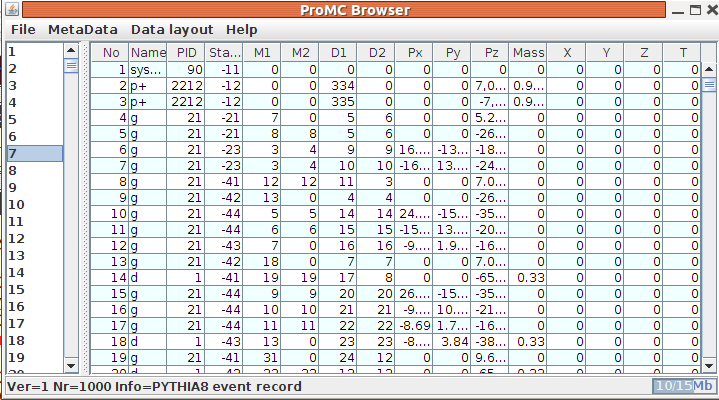

On the left, you will see event numbers. Double click on any number. The browser will display the event record with all stored particles for this event (PID, Status,Px,Py,Pz, etc).

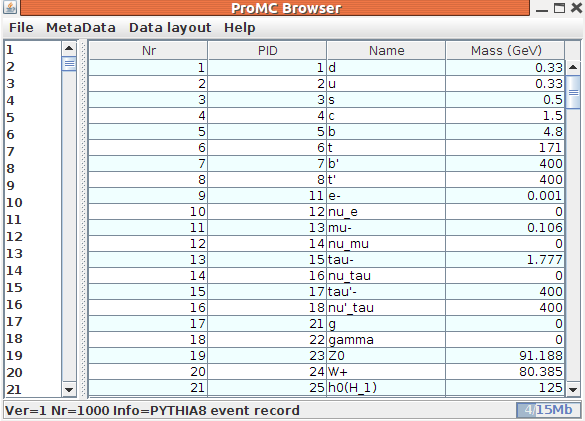

You can access metadata on particle data, such as information on particle types, PID and masses using the [Metadata]→[Particle data] menu. This record is common for all events (ProMC does not store particle names and masses for each event).

If the ProMC file was made “self-describing” and stores templates for proto layouts used to generate analysis code, you can open the “Data layout” menu:

This information can be used to generate analysis code and make future modification to the existing file. Use “promc_proto” command to extract such files, and “proto_code' to generate the analysis code. See the tutorial section.

You can look at event information (process ID, PDF, alphaS, weight) if you navigate with the mouse to the event number on the left, and click on the right button. You will see a pop-up menu. Select “Event information”.

If the ProMC package is installed, you can use a shorter command to lanch the browser:

promc_browser Pythia8.promc

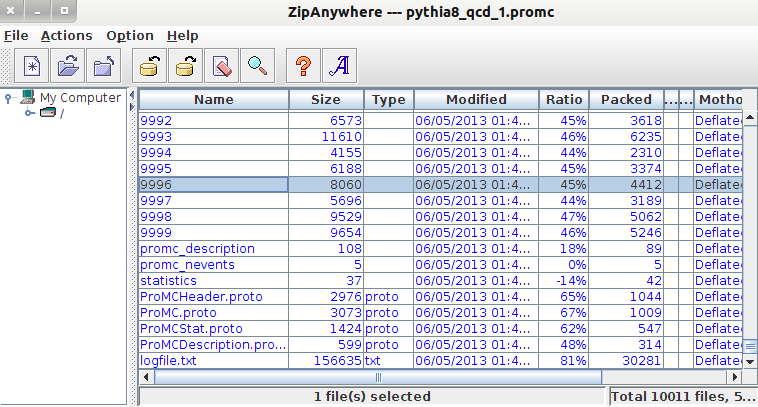

ProMC File Browser

To view ProMC encoded entry, use a browser which can help to see what is inside ProMC files.

wget http://atlaswww.hep.anl.gov/asc/promc/download/zipany.jar java -jar zipany.jar Pythia8.promc

It will pop-up a GUI with the entries inside the file. This tool is called ZipAnywhere and it has a license for non-commercial usage.

This tool is useful for:

- To view how the data records are organized

- To see size of each event and compression

- To view certain metadata which encoded as strings. For example, you can view logfile.txt, promc_description, promc_nevents, and platform-neutral proto files.

Generally, you cannot encode events since this requires external java library. Of course, you can also unzip the ProMC file (this may create very large number of files!)

Using Java and Java API

ProMC files can be read using Java without native C++ libraries. See the tutorials. You need only a single jar file (browser_promc.jar) to access all information inside a ProMC file. Look at the tutorials which show how to use it inside a Java program. In addition, you can browser the events with the same jar file:

wget http://atlaswww.hep.anl.gov/asc/promc/download/browser_promc.jar java -jar browser_promc.jar file.promc

The Java API of the part which access info inside the ProMC files is here. But you only need a few classes if you use the default data layout shipped with the ProMC.

- HepSim API for FileMC, FileNLO and browser.

- ProMCDescription - description

- ProMCHeader header information (beginning of file)

- ProMCEvent.Event - keep info on separate events

- ProMCEvent.Particles - keeps particles

- ProMCStat - statistics info (end of file)

If you create a different data layout, you can generate Java API yourself.

Checking file consistency

Since ProMC is zip archive, you can check files as:

unzip -l file.promc

which will list all ProtocolBuffers entries. You can also view information about the files as:

promc_info file.promc

(this command assumes that ProMC library is installed). Another command is:

promc_dump file.promc

which, similar to “unzip”, lists all entries.

Useful environmental variables

You can set a number of environmental variables to simplify input and output. Note that “PROMC” variable should always be set, while other variables are optional:

- PROMC_OUT - if it is set, the output ProMC file will be in the directory specified by “PROMC_OUT”, i.e. $PROMC_OUT/output.promc.

- PROMC_PROTO - if it is set, ProMC will look for *.proto description files inside the directory given by $PROMC_PROTO/proto

- PROMC_LOG - if it set, ProMC will attach the “logfile.txt” from $PROMC_LOG/logfile.txt

Visualizing data

You can plot histograms for the desired variables using several approaches:

- Use ROOT classes and methods if you read data in C++

- Use ROOT classes and methods if you read data in Python. Use PyROOT. You can also use any other visualization framework in Python, such as MatPlotLib

- Use DataMelt classes and methods if you read data using Java

Using with fast simulation

Use Delphes fast detector simulation program to process the MC events. Delphes can read ProMC files directly using “readers/DelphesProMC.cxx” inside the Delphes package.

Here are the steps to do fast detector simulations using ProMC files from the HepSim repository:

- Compile and setup ProMC, such that the variable $PROMC is defined.

- Download Delphes-3.0.12.tar.gz (or higher), untar and compile it as: ”./configure; make”. This will create the converter “DelphesProMC”

- Correct input card “examples/delphes_card_ATLAS.tcl” and remove the line “TauTagging”. We do not want to use it since it searches for mother particles. Since ProMC files are typically slimmed after removing some unstable soft particle, Delphes will fail on this line.

- Then create ROOT file with the fast detector simulation:

wget http://mc.hep.anl.gov/asc/hepsim/events/pp/100tev/higgs_pythia8/pythia100higgs_001.promc ./DelphesProMC examples/delphes_card_ATLAS.tcl pythia100higgs_001.root pythia100higgs_001.promc

Using with NLO programs

ProMC is a convenient data format to save unweighted events from NLO program. See the data layout example in example/proto/mcfm directory. The ProMC is easy to deploy on BlueGEne/Q and other supercomputers where, often, CERNLIB (for NTUPLES) and ROOT (for ROOT trees) do not exist. For NLO, you can reduce information to be stored for output particles.

See the NLO data description here: ProMC.proto - description file for NLO.

NLO has typically a few particles with little information about them. Instead, we need substantial information about uncertainties (for example, PDF uncertainties). You can encode uncertainties as varints using this algorithm: error[i] = int ((PDF[i]/ PDF[0]) * 10000). The integer array “error” keeps “deviations” from the central PDF value. Such value are close to 0, so the varint encoding will be very effective. For CT010, for example the array will have 52 integer values per event. Use the array “idata” in the ProMC.proto to fill such values.

You can view these files as

wget http://atlaswww.hep.anl.gov/asc/promc/download/browser_promc.jar java -cp browser_promc.jar probrowser.NLO http://mc.hep.anl.gov/asc/hepsim/events/pp/100tev/higgs_ttbar/httbar_0000000.promc

On the left panel, click on the event and then look at “Event info”. It shows the integer values (idata) that encode the PDF uncertainties, while the float array (“fdata”) shows other information. The first element in the float array is the weight of the event. The particle information is shown as usual (but without mother ID etc.).

Where ProMC is appropriate?

ProMC is used to store truth MC event records (about x2 more compact than compressed HEPMC files). ProMC is also used for Snowmass 2012-2013 to keep Delphes fast simulation files (including reconstructed jets and other objects). See the Snowmass web page. Also look at the MC fast simulation repository for Snowmass 2013.

Source code documentation

Use this Doxygen description to work with C++:

- annotated classes - for complete MC event records

- annotated classes - for NLO event records

ProMC Java API that is used to store data:

- ProMCHeader for MC and ProMCHeader for NLO

This is documentation for CPython:

- annotated classes - for complete MC event records

- annotated classes - for NLO event records

Benchmark tests

This table shows file sizes and access speed for 10,000 ttbar events at a 14 TeV colliders. The same information is stored in different file formats. The files for such benchmarks, generated using PYTHIA8, are located in the =HepSim= repository. Please take a look at the C++ code which shows how to fill such files. HEPMC and LHEF files were tested after the GZIP, BZIP2 and LZMA compression. It should be noted that the ProMC file includes a complete log file, particle data table, and ProMC file layouts; this information which is not included in other file formats.

The ProMC file is about 38% smaller than ROOT file based on Double32 type to store float values (like 4-momenta of particles) and INT type for integer values (id, status, mothers, etc.). The default ROOT compression is used. ProMC files are 60% smaller than compressed HEPMC and LHE files using LZMA and BZIP2 compression.

| File format | File Size (MB) | C++ (sec) | CPython (sec) | Java (sec) | Jython (sec) |

|---|---|---|---|---|---|

| ProMC | 307 | 15.8 | 980 | 11.7 (12.1 +JVM startup) | 33.3 (35 +JVM startup) |

| ROOT | 423 | 20.4 | 66.7 (PyROOT) | - | - |

| LHEF | 2472 | 84.7 | 30.4 | 9.0 (9.6 +JVM startup) | - |

| HEPMC | 2740 | 175.1 | - | - | - |

| LHEF (gzip) | 712 | - | - | - | - |

| LHEF(bzip2) | 552 | - | - | - | - |

| LHEF (lzma) | 513 | - | - | - | - |

| HEPMC (gzip) | 1021 | - | - | - | - |

| HEPMC (bzip2) | 837 | - | - | - | - |

| HEPMC (lzma) | 802 | - | - | - | - |

Table 1. Benchmark tests for reading files with 10,000 ttbar events stored in different file formats. For each test, the memory cache on Linux was cleared. In case of C++, the benchmark program reads complete event records using appropriate libraries. CPython code for ProMC file is implemented in pure CPython and does not use C++ binding (unlike PyROOT that uses C++ libraries). In case of LHEF files. JAVA and CPYTHON benchmarks only parse lines and tokenize the strings, without attempting to build an event record, therefore, such benchmarks may not be accurate while comparing with ProMC and ROOT.

Benchmark for the read speed was performed using a C++ code compiled on Intel(R) Xeon(R) CPU X5660 @ 2.80GHz. For these benchmark tests, the file is opened, and all entries with 4-momenta of particles were extracted. No any calculations are performed. As expected, the read speed of the ProMC file is 30% faster than for the ROOT files, and is substantially faster compared to the other formats. It can be seen that this difference in the read speed is roughly proportional to file sizes. Files after compression were not tested. A typical time for file decompression is 2-3 min. The JAVA Virtual Machine (JVM) processes data processes the ProMC files faster: this indicates that JVM creates more optimized code to deliver a better performance.

No significant difference in the write speed was detected (this test was dominated by event generation).

Files that have pile-up events are about 50-60% smaller than ROOT files, since soft particles (small values for momenta) are stored using varints with smaller number of bytes.

History

ProMC is a rewrite of an older package (CBook) for the community supported jHepWork. Currently, this program has the name DataMelt. The current ProMC version is based on HEvent record format Examples and zipious++ library which was first publicly available since 2008. (S.C.).

License

ProMCE is licensed by the GNU General Public License v3 or later. Please refer GPL-v3.0. It should be note that the project uses Protocol Buffers and ZIPIOS++ using same license.

This program is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3 of the License, or any later version. This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

The text of this manual cannot be freely redistributed and is subject to the Creative Commons Attribution-Share Alike License; either version 3.0 of the License, or any later version. See By-SA. You are free to copy, distribute, transmit, and adapt ProMC under the following conditions:

- Attribution. You must attribute the ProMC work i.e. by linking this web page.

- Non-commercial. You may not use this work for commercial purposes

- Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under the same, similar or a compatible license.

Ongoing work

- More benchmarks. Check compression for different pT scenario. (Memory usage, speed)

- Better buffering?

- Write interface for ROOT

Limitations of ProMC

Limitations are not detected so far. With zip64 is support (starting from ProMC v1.4), the number of entries can be arbitrary large. For zip64, you should run ProMC with the call:

ProMCBook* epbook = new ProMCBook("file.promc","w",true);

With this option, events are collected in the memory and writted after “close()” statement. Without last option, the number of entries limited to 65k (but events are written after each call).

ProMC can read files that are created using zip64 using Java, Python and C++ (as shown above).

How to cite ProMC data format

- S.V.Chekanov, “Next generation input-output data format for HEP using Google's protocol buffers”, ANL-HEP-CP-13-32, Snowmass 2013 Proceedings. arxiv.org:1306.6675

- S.V. Chekanov, E.May, K. Strand, P. Van Gemmeren, ProMC: Input-output data format for HEP applications using varint encoding, ANL-HEP-PR-13-41 arXiv:1311.1229. Computer Physics Communications 185 (2014), pp. 2629-2635

Bibtex entry:

@article{Chekanov20142629,

title = "ProMC: Input–output data format for \{HEP\} applications using varint encoding ",

journal = "Computer Physics Communications ",

volume = "185",

number = "10",

pages = "2629 - 2635",

year = "2014",

note = "",

issn = "0010-4655",

doi = "http://dx.doi.org/10.1016/j.cpc.2014.06.016",

url = "http://www.sciencedirect.com/science/article/pii/S0010465514002215",

author = "S.V. Chekanov and E. May and K. Strand and P. Van Gemmeren",

keywords = "Data",

keywords = "Format",

keywords = "\{IO\}",

keywords = "Input–output",

keywords = "\{LHC\} ",

abstract = "Abstract A new data format for Monte Carlo (MC) events, or any structural data, including experimental data, is discussed. The format is designed to store data in a compact binary form using variable-size integer encoding as implemented in the Google’s Protocol Buffers package. This approach is implemented in the ProMC library which produces smaller file sizes for \{MC\} records compared to the existing input–output libraries used in high-energy physics (HEP). Other important features of the proposed format are a separation of abstract data layouts from concrete programming implementations, self-description and random access. Data stored in ProMC files can be written, read and manipulated in a number of programming languages, such C++, JAVA, \{FORTRAN\} and PYTHON. "

}

— Sergei Chekanov 2013/03/11 21:45 — Sergei Chekanov 2013/05/03 12:58