
Benchmark tests

IO benchmarks using ProMC format

(E.May)

A number of IO tests are being performed on BlueGene/Q using the Pythia8 and Pythia6 MC generators, with output written in the ProMC data format.

A link to old Ed May webpages of Vesta work.

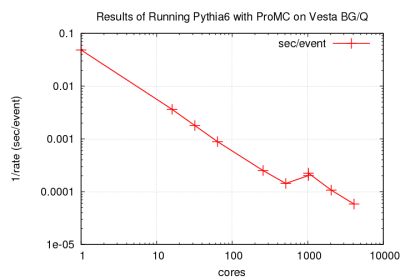

Some comments on data/plots of running Pythia6 with ProMC & ProBuf on BG/Q Vesta. This uses the Fortran code from the latest 1.1 tar file, which simulates 5000 events per core for pp collisions at 4 on 4 TeV. Except for the normalizing point at 1 core, all runs use 16 cores per node. Jobs were run at core counts up to 4096. This last point produced 20.5 M events in 1197 sec (20 mins).
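As a quick cross-check of the numbers quoted above, the sketch below recomputes the total event count and the time per event for the 4096-core run. The variable names are illustrative and are not taken from the benchmark code.

```python
# Cross-check of the 4096-core data point quoted in the text.
events_per_core = 5000      # events simulated by each core
cores = 4096                # largest job size
run_time_sec = 1197         # run time of that job in seconds

total_events = events_per_core * cores
sec_per_event = run_time_sec / total_events   # the "inverse rate" R
events_per_sec = total_events / run_time_sec

print(f"total events : {total_events / 1e6:.2f} M")
print(f"R (sec/event): {sec_per_event:.3e}")
print(f"events/sec   : {events_per_sec:.0f}")
```

The total comes out at 20.48 M events, consistent with the 20.5 M quoted.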

Fig 1

The first plot shows the (inverse) rate as a function of the number of cores. There is a noticeable jog at 1024 cores and above. I ran a second job at 1024 cores, which reproduced the result. I have no reasonable explanation for the observed behavior. I remark that some earlier studies at smaller numbers of cores, done without event-level I/O, showed this kind of behavior when mixing the number of nodes/cores; see http://www.hep.anl.gov/may/ALCF/index1.html.

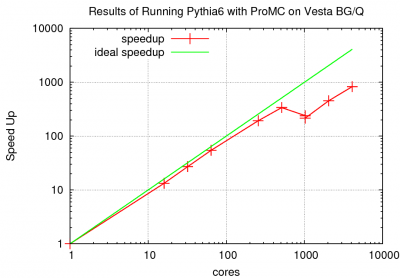

Fig 2

Plot 2 shows the data arranged as a speed-up presentation. Note the use of log scales, which visually minimises the nonlinearity.

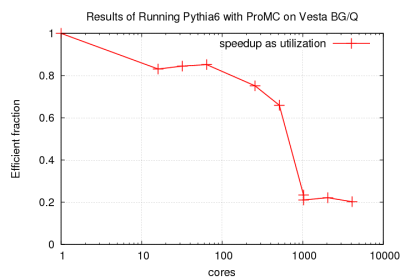

Fig 3

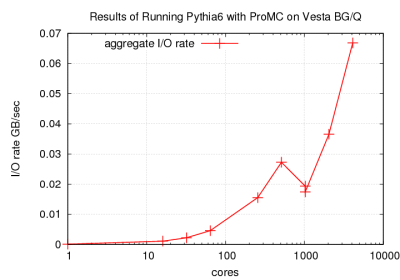

A more appropriate measure is shown in Plot 3, which gives the effective utilization of the multiple cores. Above 100 cores the fraction begins to drop, reaching only 20% for 1024 (and above) cores. This is really rather poor performance! Plot 5 shows the I/O performance, which indicates that the code is not really pushing the I/O capabilities of Vesta.

This plot shows the efficiency (R_1c / Nc / R_Nc) v. Nc, where

R == sec/event = job_run_time/total_number_of_events

Nc == number of cores used in job

For a perfect speed-up this would always be 1. My experience with clusters of smaller size is that 80% is usually achievable, while 20% is quite low; the usual interpretation is that the code has a high fraction of serialization. For this case it would be more efficient to run 8 jobs of 512 cores than 1 job of 4096. This of course is speculation on my part, as I have not identified the cause of the inefficiency!
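The efficiency definition above can be written out as a small sketch. The second function is an assumption added purely for illustration: a toy model in which a fraction s of the single-core run time is executed one core at a time, giving efficiency = 1 / (1 + s * (Nc - 1)). It is not fitted to the measurements, and the observed step between 512 and 1024 cores is not something a smooth model like this reproduces. The input numbers are made up.

```python
def efficiency(r_1c, r_nc, n_cores):
    """Efficiency R_1c / Nc / R_Nc, with R in sec/event."""
    return r_1c / n_cores / r_nc


def serial_fraction(eff, n_cores):
    """Invert the toy model eff = 1 / (1 + s * (Nc - 1)) for s.

    ASSUMPTION: a fraction s of the single-core run time is serialized
    across cores. Illustrative only, not derived from the benchmark data.
    """
    return (1.0 / eff - 1.0) / (n_cores - 1)


# Hypothetical single-core rate; perfect scaling means R_Nc = R_1c / Nc.
r_1c = 0.24                                    # sec/event on 1 core (made up)
perfect = efficiency(r_1c, r_1c / 1024, 1024)
s_implied = serial_fraction(0.20, 1024)        # s implied by 20% at 1024 cores

print(f"perfect speed-up efficiency: {perfect}")
print(f"serial fraction implied by 20% at 1024 cores: {s_implied:.4f}")
```

In this toy model even a serialized fraction well below 1% of the single-core run time would be enough to pull the efficiency down to 20% at 1024 cores.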

Fig 4

The ALCF experts suggested that the I/O model of 1 directory with many files in that 1 directory would perform badly due to lock contention on the directory! Thus the example code was modified to use a model of 1 output ProMC data file per directory. Running the modified code produced the following figures: http://www.hep.anl.gov/may/ALCF/new.vesta.4up.pdf (Vesta plots). Focusing on the 'Efficiency' plots, there appear to be some (small) improvements both at low core numbers and at high core numbers: 80% rising to 90% and 20% rising to 40% respectively.
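The one-file-per-directory layout could look roughly like the sketch below. The benchmark itself is Fortran; this Python version only illustrates the directory structure, and the directory and file names (`rank00000`, `output.promc`) are hypothetical, since the text does not give the naming actually used.

```python
import os
import tempfile


def rank_output_path(base_dir, rank, filename="output.promc"):
    """Return a per-rank output path, creating one directory per rank.

    ASSUMPTION: the naming scheme is hypothetical; the text only states
    that each ProMC output file is placed in its own directory.
    """
    rank_dir = os.path.join(base_dir, f"rank{rank:05d}")
    os.makedirs(rank_dir, exist_ok=True)
    return os.path.join(rank_dir, filename)


# Stand-in for the job's output area; 4 ranks are enough to show the layout.
base = tempfile.mkdtemp()
paths = [rank_output_path(base, r) for r in range(4)]
for p in paths:
    print(p)
```

Each rank then opens its own file in its own directory, so no two ranks create files in the same directory.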

The large step between 512 and 1024 cores is still present!

As part of the bootcamp for Mira, the code was moved to the BG/Q Mira and a subset of the benchmarks was run with the new IO model. The results are shown in http://www.hep.anl.gov/may/ALCF/new.mira.4up.pdf (Mira figures). Again focusing on the Efficiency plot, the results are very similar. This suggests that jobs using this naive IO model, in which each MPI rank writes its own output ProMC file, should be limited to 512 cores or fewer for good utilization of the machine and IO resources. This is OK for Vesta, where the minimum charging is 32 nodes (i.e. 512 cores). On Mira, where the minimum is 512 nodes (i.e. 8192 cores), there is not a good match!
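The mismatch can be made explicit with a few lines of arithmetic. This is only a sketch using the figures quoted in the text (16 cores per node, the minimum allocations, and the 512-core limit suggested by the efficiency plots); the constant names are illustrative.

```python
CORES_PER_NODE = 16          # as used for all multi-core runs above
MAX_EFFICIENT_CORES = 512    # limit suggested by the efficiency plots

# Minimum allocations quoted in the text, in nodes.
min_nodes = {"Vesta": 32, "Mira": 512}

min_cores = {m: n * CORES_PER_NODE for m, n in min_nodes.items()}
ratio = {m: c // MAX_EFFICIENT_CORES for m, c in min_cores.items()}

for machine in min_nodes:
    print(f"{machine}: minimum {min_cores[machine]} cores "
          f"= {ratio[machine]} x {MAX_EFFICIENT_CORES} cores")
```

With these numbers the Mira minimum allocation is 16 times the job size at which this IO model still runs efficiently.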

— Ed May 2014/02/04 — Ed May 2014/05/27

— Sergei Chekanov 2014/02/04 10:42