
ARGO (A Rapid Generator Omnibus) & Balsam

<html> <b>Authors:</b><br> J Taylor Childers (ANL HEP)<br> Tom Uram (ANL ALCF)<br> </html>

Description & Versions

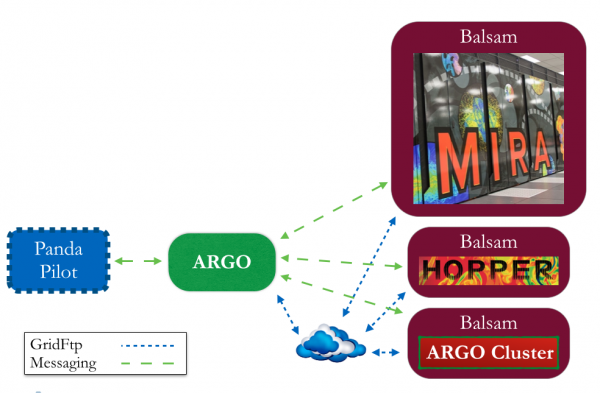

<html> <p>Balsam is an interface to a batch system's local scheduler. Each scheduler is abstracted so that Balsam remains scheduler-independent.</p>

<p>ARGO is a workflow manager. ARGO can submit jobs to any system on which Balsam is running.</p>

<p>Both ARGO and Balsam are implemented in <a href="http://www.python.org/" target="_blank">Python</a> as <a href="http://www.djangoproject.com/" target="_blank">Django</a> apps. <a href="http://www.djangoproject.com/" target="_blank">Django</a> was chosen because it provides needed services by default, such as database handling and web interfaces for monitoring job status. <a href="http://www.djangoproject.com/" target="_blank">Django</a> version 1.6 has been used with <a href="http://www.python.org/" target="_blank">Python</a> 2.6.6 (with GCC 4.4.7 20120313 (Red Hat 4.4.7-3)).</p>

<p>The communication layer is handled using <a href="http://www.rabbitmq.com/" target="_blank">RabbitMQ</a> and <a href="http://github.com/pika/pika" target="_blank">pika</a>. <a href="http://www.rabbitmq.com/" target="_blank">RabbitMQ</a> is a message queue system. Message queues were an easy alternative to writing a custom TCP/IP interface. This requires installing and running a <a href="http://www.rabbitmq.com/" target="_blank">RabbitMQ</a> server. <a href="http://www.rabbitmq.com/" target="_blank">RabbitMQ</a> 3.3.1 is used with Erlang R16B02.</p>

<p>The data transport is handled using <a href="http://toolkit.globus.org/toolkit/docs/latest-stable/gridftp/" target="_blank">GridFTP</a>. This requires installing and running a <a href="http://toolkit.globus.org/toolkit/docs/latest-stable/gridftp/" target="_blank">GridFTP</a> server. Globus version 5.2.0 is used.</p> </html>
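The scheduler abstraction described above can be pictured as one small interface with a subclass per batch system. The sketch below is purely illustrative and is not Balsam's actual code: the class names, the `submit_command` method, and the `SCHEDULERS` registry are hypothetical, Cobalt's `qsub` is assumed as the scheduler on the ALCF machines, and treating `executable` as the Condor job file is an assumption. The job field names follow the submission message format shown under Job Submission.

```python
import shlex


class Scheduler(object):
    """Hypothetical common interface; each batch system gets one subclass."""

    def submit_command(self, job):
        """Return the submit command for a job dict as an argument list."""
        raise NotImplementedError


class CobaltScheduler(Scheduler):
    """Assumed mapping of job fields onto Cobalt's qsub (-n nodes, -t minutes)."""

    def submit_command(self, job):
        command = ["qsub", "-n", str(job["nodes"]), "-t", str(job["wall_minutes"])]
        # Extra scheduler options are passed through untouched.
        command += shlex.split(job.get("scheduler_args") or "")
        command.append(job["executable"])
        command += shlex.split(job.get("executable_args") or "")
        return command


class CondorScheduler(Scheduler):
    """Assumption: the job's executable field names a Condor job file."""

    def submit_command(self, job):
        return ["condor_submit", job["executable"]]


# Hypothetical registry: callers only ever see the Scheduler interface.
SCHEDULERS = {"cobalt": CobaltScheduler, "condor": CondorScheduler}


def get_scheduler(name):
    """Look up and instantiate the scheduler registered under a name."""
    return SCHEDULERS[name]()


example_job = {
    "executable": "alpgenCombo.sh",
    "executable_args": "zjetgen90_mpi alpout.input.1 alpout.input.2 32",
    "nodes": 2,
    "wall_minutes": 60,
    "scheduler_args": "--mode=script",
}
cobalt_command = get_scheduler("cobalt").submit_command(example_job)
```

With a layout like this, supporting a new batch system means adding one subclass and one registry entry, while the code that drives jobs stays unchanged.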

Job Submission

<html>Jobs are submitted to ARGO via a <a href="http://www.rabbitmq.com/" target="_blank">RabbitMQ</a> message queue. The messages are serialized as JSON, for example with the Python <a href="http://docs.python.org/2/library/json.html" target="_blank">json</a> module. An example submission is: </html>

- example_msg.txt

'''
{
    "preprocess": null,
    "preprocess_args": null,
    "postprocess": null,
    "postprocess_args": null,
    "input_url": "gsiftp://www.gridftpserver.com/path/to/input/files",
    "output_url": "gsiftp://www.gridftpserver.com/path/to/output/files",
    "username": "bob",
    "email_address": "[email protected]",
    "jobs": [
        {
            "executable": "zjetgen90_mpi",
            "executable_args": "alpout.input.0",
            "input_files": ["alpout.input.0", "cteq6l1.tbl"],
            "nodes": 1,
            "num_evts": -1,
            "output_files": ["alpout.grid1", "alpout.grid2"],
            "postprocess": null,
            "postprocess_args": null,
            "preprocess": null,
            "preprocess_args": null,
            "processes_per_node": 1,
            "scheduler_args": null,
            "wall_minutes": 60,
            "target_site": "argo_cluster"
        },
        {
            "executable": "alpgenCombo.sh",
            "executable_args": "zjetgen90_mpi alpout.input.1 alpout.input.2 32",
            "input_files": ["alpout.input.1", "alpout.input.2", "cteq6l1.tbl", "alpout.grid1", "alpout.grid2"],
            "nodes": 2,
            "num_evts": -1,
            "output_files": ["alpout.unw", "alpout_unw.par", "directoryList_before.txt", "directoryList_after.txt", "alpgen_postsubmit.err", "alpgen_postsubmit.out"],
            "postprocess": "alpgen_postsubmit.sh",
            "postprocess_args": "alpout",
            "preprocess": "alpgen_presubmit.sh",
            "preprocess_args": null,
            "processes_per_node": 32,
            "scheduler_args": "--mode=script",
            "wall_minutes": 60,
            "target_site": "vesta"
        }
    ]
}
'''
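A message like the one above could be assembled and published from Python roughly as follows. This is a minimal sketch, not ARGO's own client code: the helper names `make_job`, `make_message` and `publish` are hypothetical, and the RabbitMQ host, exchange and routing key are placeholder assumptions that must be replaced with the values of your ARGO installation. Only the field names are taken from the example message.

```python
import json


def make_job(executable, target_site, nodes=1, processes_per_node=1,
             wall_minutes=60, executable_args=None, input_files=None,
             output_files=None, scheduler_args=None):
    """Build one entry of the "jobs" list using the example's field names."""
    return {
        "executable": executable,
        "executable_args": executable_args,
        "input_files": input_files or [],
        "output_files": output_files or [],
        "nodes": nodes,
        "processes_per_node": processes_per_node,
        "num_evts": -1,
        "wall_minutes": wall_minutes,
        "scheduler_args": scheduler_args,
        "preprocess": None,
        "preprocess_args": None,
        "postprocess": None,
        "postprocess_args": None,
        "target_site": target_site,
    }


def make_message(username, email_address, input_url, output_url, jobs):
    """Serialize a full submission to a JSON string (None becomes null)."""
    return json.dumps({
        "preprocess": None,
        "preprocess_args": None,
        "postprocess": None,
        "postprocess_args": None,
        "input_url": input_url,
        "output_url": output_url,
        "username": username,
        "email_address": email_address,
        "jobs": jobs,
    })


def publish(body, host="localhost", exchange="", routing_key="argo_jobs"):
    """Send the message with pika; host, exchange and routing key are assumed."""
    import pika  # imported here so the helpers above work without pika installed
    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    try:
        channel = connection.channel()
        channel.basic_publish(exchange=exchange, routing_key=routing_key, body=body)
    finally:
        connection.close()


body = make_message(
    username="bob",
    email_address="bob@example.com",  # placeholder address
    input_url="gsiftp://www.gridftpserver.com/path/to/input/files",
    output_url="gsiftp://www.gridftpserver.com/path/to/output/files",
    jobs=[make_job("zjetgen90_mpi", "argo_cluster",
                   executable_args="alpout.input.0",
                   input_files=["alpout.input.0", "cteq6l1.tbl"],
                   output_files=["alpout.grid1", "alpout.grid2"])],
)
```

Calling `publish(body)` would then hand the message to the RabbitMQ server. It is left out of the example run because it needs a reachable server.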

Installation

- On Mira:

soft add +python (to get Python 2.7)

- Install <html><a href="http://pypi.python.org/pypi/virtualenv" target="_blank">virtualenv</a></html>:

wget --no-check-certificate http://pypi.python.org/packages/source/v/virtualenv/virtualenv-13.1.0.tar.gz

- Create install directory:

mkdir /path/to/installation/argobalsam

export INST_PATH=/path/to/installation/argobalsam

cd $INST_PATH

- Create virtual environment:

virtualenv argobalsam_env

- On Edison:

module load virtualenv

module load python/2.7

- Activate virtual environment:

. argobalsam_env/bin/activate

- On Edison:

- To use pip you need a certificate, so run mk_pip_cabundle.sh, then include --cert ~/.pip/cabundle in all your pip commands.

- Install needed software:

pip install django

- If your Python is older than 2.7, you need:

pip install django==1.6.2

pip install south

pip install pika

pip install MySql (only if needed)

- For this I had to install on SLC6:

yum install mysql mysql-devel mysql-server

- Create django project:

django-admin.py startproject argobalsam

cd argobalsam

git clone [email protected]:balsam.git argobalsam_git

mv argobalsam_git/* ./

rm -rf argobalsam_git/

- Update the following lines of argobalsam/settings.py:

- At the top:

from site_settings.mira_settings import *

INSTALLED_APPS = (
    'django.contrib.admin',
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'south',
    'balsam_core',
    'argo_core',
)

- If you are using MySQL:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.mysql',
        'NAME': 'your_table_name_goes_here',
        'USER': 'your_login',
        'PASSWORD': 'your_password',
        'HOST': '127.0.0.1',
        'PORT': '',
        'CONN_MAX_AGE': 2000000,
    }
}

- Set up your database:

- For older Django using South (pre-1.7):

python manage.py syncdb

- Create the first migration for balsam_core:

python manage.py schemamigration balsam_core --initial

- Apply the first migration for balsam_core:

python manage.py migrate balsam_core --fake

- Create the first migration for argo_core:

python manage.py schemamigration argo_core --initial

- Apply the first migration for argo_core:

python manage.py migrate argo_core --fake

- For newer Django (1.7,1.8):

python manage.py syncdb

- For Django (1.9+):

python manage.py migrate --fake-initial
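The version-dependent database steps above can be summarized in a few lines of Python. This is a sketch only: the function name `db_setup_commands` is hypothetical and not part of ARGO or Balsam, and it simply returns the commands listed above for a given Django version tuple.

```python
def db_setup_commands(django_version, apps=("balsam_core", "argo_core")):
    """Return the manage.py commands from this section for a Django version.

    django_version is a tuple such as (1, 6, 2); only major.minor is used.
    """
    major_minor = tuple(django_version[:2])
    if major_minor < (1, 7):
        # Pre-1.7: South handles migrations, one initial migration per app.
        commands = ["python manage.py syncdb"]
        for app in apps:
            commands.append("python manage.py schemamigration %s --initial" % app)
            commands.append("python manage.py migrate %s --fake" % app)
        return commands
    if major_minor < (1, 9):
        # Django 1.7 and 1.8
        return ["python manage.py syncdb"]
    # Django 1.9 and later
    return ["python manage.py migrate --fake-initial"]


for command in db_setup_commands((1, 6, 2)):
    print(command)
```

Inside the virtual environment, the installed version is available as the tuple `django.VERSION`, which can be passed to the function directly.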

Git Tag Notes

<html><a href="https://trac.alcf.anl.gov/projects/balsam/browser" target="_blank">Git Browser</a></html>

5.0

- updated to MySQL database

- create filter website

- Added group_identifier to ArgoDbEntry so that jobs can be grouped together and searched for more easily.

4.1

- updated vesta balsam delays to 5 min

- making all settings files consistent.

- added code to deal with jobs after a crash, and added print statements

- added restart try of subprocesses in the service loop

- updated vesta settings

- Updated Balsam code to support tukey submit script with manual mpirun call. Mira handles the mpirun call for the user whereas tukey does not.

- added tukey balsam site to argo settings

- updated argo settings to reflect new install directory balsam_production

4.0

- adding condor command files which should have already been added. Fixed bugs with adding alpgen data to finished emails.

- adding logger.exception in the proper places. Fixed small bugs and added JobHold error

- Adding files for the condor scheduler changes to include dagman jobs and condor job files.

- Many updates to accommodate the Condor scheduler's ability to accept condor job files and condor dagman job files. Also includes updates to include more information in emails upon completion.

- fixing missing str() conversion

- fixed error messages where integers needed to be converted to strings

- updating only specific db fields instead of full object

3.2

- re-added JobStatusReceiver

3.1

- adding QueueMessage class to argo

- merging old and new?

- adding longer wait times to balsam on ascinode settings, and error checking to the job status receiver.

3.0

- major change to ARGO so that each transition takes place in a subprocess and does not block argo from processing other jobs in parallel

- added error catching for job id mismatch