ARGO (A Rapid Generator Omnibus) & Balsam

Authors:

J Taylor Childers (ANL HEP)

Tom Uram (ANL ALCF)

Description & Versions

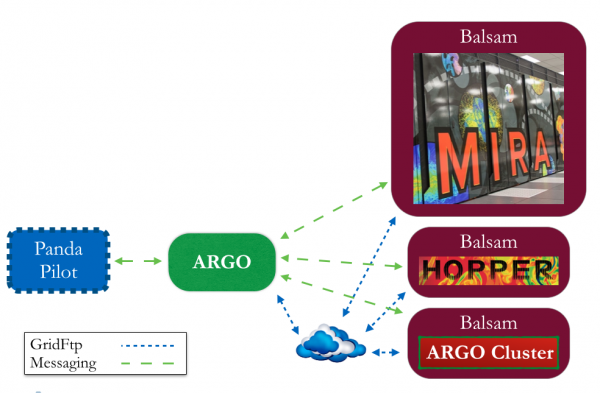

Balsam is an interface to a batch system's local scheduler. Each scheduler is abstracted so that Balsam itself remains scheduler-independent.
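The scheduler abstraction can be pictured as a common interface with one subclass per scheduler. The sketch below is illustrative only and is not Balsam's actual code. It is written for modern Python 3, not the Python 2.6 deployment described here. The class and function names are hypothetical. The Cobalt flags used (`-A` project, `-n` node count, `-t` wall time in minutes) are the commonly documented Cobalt `qsub` options and should be checked against the local installation.

```python
import shlex
from abc import ABC, abstractmethod


class Scheduler(ABC):
    """Hypothetical base class: one subclass per local batch scheduler."""

    @abstractmethod
    def submit_command(self, script, nodes, wall_minutes, scheduler_args=None):
        """Return the command line (as a list) that submits `script`."""


class CobaltScheduler(Scheduler):
    """Builds a Cobalt qsub call (the scheduler on ALCF systems such as Mira/Vesta)."""

    def __init__(self, project):
        self.project = project

    def submit_command(self, script, nodes, wall_minutes, scheduler_args=None):
        cmd = ["qsub", "-A", self.project, "-n", str(nodes), "-t", str(wall_minutes)]
        if scheduler_args:
            # Extra flags taken verbatim from the job description, e.g. "--mode=script".
            cmd += shlex.split(scheduler_args)
        cmd.append(script)
        return cmd


class CondorScheduler(Scheduler):
    """Condor reads resources from a submit description file, so only that file is passed."""

    def submit_command(self, script, nodes, wall_minutes, scheduler_args=None):
        # Here `script` is the submit description file; nodes/wall_minutes go inside it.
        return ["condor_submit", script]


def build_submission(scheduler, job, script):
    # Calling code only sees the Scheduler interface, never a concrete scheduler.
    return scheduler.submit_command(
        script, job["nodes"], job["wall_minutes"], job.get("scheduler_args")
    )
```

Adding a new site then means adding one subclass. Code that turns a job description into a submission does not change.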

ARGO is a workflow manager. ARGO can submit jobs to any system on which Balsam is running.

Both ARGO and Balsam are implemented in Python as Django apps. Django was chosen because it provides several needed services out of the box, such as database handling and web interfaces for monitoring job status. Django 1.6 was used with Python 2.6.6 (built with GCC 4.4.7 20120313 (Red Hat 4.4.7-3)).

Communication is handled by RabbitMQ, a message queue system, through the pika Python client library. Message queues were an easy alternative to writing a custom TCP/IP interface, but they require installing and running a RabbitMQ server. RabbitMQ 3.3.1 is used with Erlang R16B02.
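Below is a minimal sketch of sending one message with pika. It assumes a RabbitMQ server on `localhost` and a placeholder queue name. These are not the queue names ARGO and Balsam actually use. The helper names are also hypothetical. pika is imported inside `publish` so that the JSON encoding can be used and tested without pika installed.

```python
import json


def encode_job_message(message):
    """Serialize a submission dict to the JSON bytes carried by RabbitMQ."""
    return json.dumps(message, sort_keys=True).encode("utf-8")


def publish(message, queue, host="localhost"):
    """Send one message to `queue` through RabbitMQ's default exchange.

    `queue` and `host` are placeholders, not ARGO/Balsam's real names.
    """
    import pika  # deferred import: only needed when actually publishing

    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    try:
        channel = connection.channel()
        # A durable queue survives a broker restart.
        channel.queue_declare(queue=queue, durable=True)
        channel.basic_publish(
            exchange="",        # default exchange: routing_key names the queue
            routing_key=queue,
            body=encode_job_message(message),
            properties=pika.BasicProperties(delivery_mode=2),  # persistent message
        )
    finally:
        connection.close()
```

For example, `publish({"username": "bob", "jobs": []}, "argo_submit_example")` would enqueue a message for a consumer listening on that (hypothetical) queue.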

The data transport is handled using GridFTP. This requires installing and running a GridFTP server. Globus version 5.2.0 is used.
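As an illustration of the data transport, the sketch below builds `globus-url-copy` calls that stage a job's input files. It does not execute them. It assumes every listed file sits directly under the message's `input_url` and uses a hypothetical local working directory. Running the commands would also require a valid grid proxy.

```python
import posixpath


def stage_in_commands(input_url, input_files, work_dir):
    """Build one globus-url-copy command (source URL, destination URL) per input file.

    Assumes each file is directly under `input_url`; `work_dir` is a
    hypothetical local job directory.
    """
    commands = []
    for name in input_files:
        source = posixpath.join(input_url.rstrip("/"), name)
        destination = "file://" + posixpath.join(work_dir, name)
        commands.append(["globus-url-copy", source, destination])
    return commands
```

Stage-out works the same way with source and destination swapped and `output_url` in place of `input_url`.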

Job Submission

Jobs are submitted to ARGO through a RabbitMQ message queue. Messages are serialized as JSON. An example submission is:

- example_msg.txt

'''
{
  "preprocess": null,
  "preprocess_args": null,
  "postprocess": null,
  "postprocess_args": null,
  "input_url": "gsiftp://www.gridftpserver.com/path/to/input/files",
  "output_url": "gsiftp://www.gridftpserver.com/path/to/output/files",
  "username": "bob",
  "email_address": "[email protected]",
  "jobs": [
    {
      "executable": "zjetgen90_mpi",
      "executable_args": "alpout.input.0",
      "input_files": ["alpout.input.0", "cteq6l1.tbl"],
      "nodes": 1,
      "num_evts": -1,
      "output_files": ["alpout.grid1", "alpout.grid2"],
      "postprocess": null,
      "postprocess_args": null,
      "preprocess": null,
      "preprocess_args": null,
      "processes_per_node": 1,
      "scheduler_args": null,
      "wall_minutes": 60,
      "target_site": "argo_cluster"
    },
    {
      "executable": "alpgenCombo.sh",
      "executable_args": "zjetgen90_mpi alpout.input.1 alpout.input.2 32",
      "input_files": ["alpout.input.1", "alpout.input.2", "cteq6l1.tbl", "alpout.grid1", "alpout.grid2"],
      "nodes": 2,
      "num_evts": -1,
      "output_files": ["alpout.unw", "alpout_unw.par", "directoryList_before.txt", "directoryList_after.txt", "alpgen_postsubmit.err", "alpgen_postsubmit.out"],
      "postprocess": "alpgen_postsubmit.sh",
      "postprocess_args": "alpout",
      "preprocess": "alpgen_presubmit.sh",
      "preprocess_args": null,
      "processes_per_node": 32,
      "scheduler_args": "--mode=script",
      "wall_minutes": 60,
      "target_site": "vesta"
    }
  ]
}
'''
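In the example submission, the second subjob lists `alpout.grid1` and `alpout.grid2` among its inputs. These are the outputs of the first subjob, so subjobs form a chain. The sketch below parses such a message and reports, for each subjob, which inputs an earlier subjob produced. It is a hypothetical checker, not part of ARGO. The set of "required" keys it enforces is an assumption chosen for illustration.

```python
import json

# Assumed minimal key set for illustration; not ARGO's actual validation rules.
REQUIRED_JOB_KEYS = ("executable", "input_files", "output_files", "nodes",
                     "processes_per_node", "wall_minutes", "target_site")


def check_submission(text):
    """Parse a submission and return (target_site, inputs-from-earlier-jobs) per subjob."""
    message = json.loads(text)
    produced = {}  # file name -> index of the subjob that lists it as an output
    report = []
    for index, job in enumerate(message["jobs"]):
        missing = [key for key in REQUIRED_JOB_KEYS if key not in job]
        if missing:
            raise ValueError("job %d is missing %s" % (index, ", ".join(missing)))
        # Inputs that an earlier subjob produces rather than ones fetched from input_url.
        from_earlier = {name: produced[name] for name in job["input_files"] if name in produced}
        report.append((job["target_site"], from_earlier))
        for name in job["output_files"]:
            produced[name] = index
    return report
```

Applied to the example message, the first subjob (on `argo_cluster`) depends on nothing earlier. The second (on `vesta`) reports `alpout.grid1` and `alpout.grid2` as coming from subjob 0.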

Installation

- Install virtualenv.

- Create the install directory:

    mkdir /path/to/installation/argobalsam
    export INST_PATH=/path/to/installation/argobalsam
    cd $INST_PATH

- Create the virtual environment:

    virtualenv argobalsam_env

- Activate the virtual environment:

    . argobalsam_env/bin/activate

- Install the needed software. Django 1.6.2 is required for Python 2.6; with Python 2.7 a newer Django version can be used.

    pip install django==1.6.2
    pip install south
    pip install pika

- Create the Django project:

    django-admin.py startproject argobalsam_deploy

- Grab the software from the git repository:

    git clone [email protected]:balsam.git argobalsam_deploy

  Because startproject has already created argobalsam_deploy, you may have to force git to write into the existing folder, or clone into a separate directory and move the files into argobalsam_deploy.

- In argobalsam_deploy/argobalsam_deploy/settings.py, update the following line to import the site-specific settings (a new settings file may be needed for a new site):

    from mira_settings import *

Git Tag Notes

- 4.1
  - updated vesta balsam delays to 5 min
  - making all settings files consistent.
  - added code to deal with jobs after a crash, and added print statements
  - added restart try of subprocesses in the service loop
  - updated vesta settings
  - Updated Balsam code to support tukey submit script with manual mpirun call. Mira handles the mpirun call for the user whereas tukey does not.
  - added tukey balsam site to argo settings
  - updated argo settings to reflect new install directory balsam_production

- 4.0
  - adding condor command files which should have already been added. Fixed bugs with adding alpgen data to finished emails.
  - adding logger.exception in the proper places. Fixed small bugs and added JobHold error
  - Adding files for the condor scheduler changes to include dagman jobs and condor job files.
  - Many updates to accommodate a Condor Scheduler ability to accept condor job files and condor dagman job files. Also includes updates to include more information on emails upon completion.
  - fixing missing str() conversion
  - fixed error messages where integers needed to be converted to strings
  - updating only specific db fields instead of full object

- 3.2
  - re-added JobStatusReceiver

- 3.1
  - adding QueueMessage class to argo
  - merging old and new?
  - adding longer wait times to balsam on ascinode settings, and an error checking to the job status receiver.

- 3.0
  - major change to ARGO so that each transition takes place in a subprocess and does not block argo from processing other jobs in parallel
  - added error catching for job id mismatch

- 2.2
  - moved postprocessing to thread
  - grid proxy now valid for 96 hours
  - fixed loop iteration for postprocess list
  - fixed the postprocessing process tracking
  - testing running post processing in a subthread
  - adding a few more debug messages
  - added more debug messages and removed channel/connection close in Message Interface. This should stop the WARNING messages from pika.
  - added run_project to cobalt flags
  - Added job ID to email
  - removed fail current jobs on restart in balsam_service, and added logging to the service loop
  - updated website with time
  - update vesta_dev settings
  - added subjob site to ls_jobs
  - removed some debug statements from GridFtp
  - updated mira queue to hadronsim
  - added 2048 bits to certificate security grid-proxy-init call
  - if job fails on scheduler status update, it runs the postprocessing
  - added vesta_dev_settings
  - added ability to specify balsam site explicitly
  - removed debug from grid ftp
  - fixes for condor
  - added balsam site to balsamjob; fixed response to holding state
  - Adding site and fixing Holding state response.
  - added failed to list of messages sent by balsam_service and added command to remove all jobs
  - Updates to fix multiple job finished send message. Using Tom Uram's excellent solution in the PreSave step :)